Abstract:

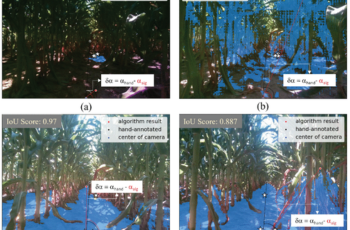

Agricultural robots have the potential to increase productivity, save labor, and optimize resource use. However, robot navigation remains challenging because agricultural landscapes are intricate and dynamic. Traditional image processing techniques often fall short in large-scale applications because environmental conditions vary. To address this challenge, this paper introduces a novel in-field RGB image processing methodology for row detection and navigation. With the state-of-the-art foundation model Segment Anything Model (SAM) as our backbone, we use vegetation index values extracted from excess green (ExG) segmentation as prompts. These prompts help SAM generate refined masks tailored to in-row robot navigation. In an evaluation on 50 test images, the navigation angle error fell mainly between −5.72° and 8.72°. However, 8% of the tests (4 of the 50 images) showed errors ranging from 19° to 48°. The overall root mean square error (RMSE) of the algorithm was 9.78°. To counter these large angular errors, we recommend integrating finite-state machine algorithms. Our research offers a promising avenue toward more robust row detection and navigation techniques in agricultural robotics.
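To make the excess green step and the reported error metric concrete, the sketch below computes the standard ExG index, 2g − r − b on chromatic coordinates (r = R/(R+G+B), and so on), thresholds it into a vegetation mask, and samples foreground pixels as positive point prompts. It ends with an angle RMSE helper of the kind behind the 9.78° figure. This is not the authors' implementation: the threshold of 0.1, the random point-sampling strategy, and the helper names (`excess_green`, `vegetation_mask`, `sample_point_prompts`, `angle_rmse`) are all hypothetical choices made for illustration. The resulting `(x, y)` coordinates and labels have the shape that `SamPredictor.predict` in Meta's `segment-anything` package accepts as `point_coords` and `point_labels`, but the SAM call itself is omitted here.

```python
import numpy as np


def excess_green(rgb: np.ndarray) -> np.ndarray:
    """Excess green index ExG = 2g - r - b on chromatic (normalized) coordinates."""
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=2)
    total[total == 0] = 1.0  # avoid division by zero on pure-black pixels
    r, g, b = (rgb[..., i] / total for i in range(3))
    return 2.0 * g - r - b


def vegetation_mask(exg: np.ndarray, threshold: float = 0.1) -> np.ndarray:
    """Binary vegetation mask; the fixed threshold of 0.1 is an assumption
    (an adaptive method such as Otsu's is a common alternative)."""
    return exg > threshold


def sample_point_prompts(mask: np.ndarray, n_points: int = 5, seed: int = 0):
    """Hypothetical prompt generator: pick up to n_points vegetation pixels
    as (x, y) coordinates, each labeled 1 (foreground)."""
    ys, xs = np.nonzero(mask)
    if len(xs) == 0:
        return np.empty((0, 2), dtype=int), np.empty((0,), dtype=int)
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(xs), size=min(n_points, len(xs)), replace=False)
    coords = np.stack([xs[idx], ys[idx]], axis=1)  # (x, y) pixel order
    labels = np.ones(len(idx), dtype=int)
    return coords, labels


def angle_rmse(predicted_deg, reference_deg) -> float:
    """Root mean square error between predicted and reference heading angles (degrees)."""
    err = np.asarray(predicted_deg, dtype=float) - np.asarray(reference_deg, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))


if __name__ == "__main__":
    # Synthetic 20x20 image: brownish soil with one green crop row in columns 8-11.
    img = np.zeros((20, 20, 3), dtype=np.uint8)
    img[:] = (120, 90, 60)
    img[:, 8:12] = (40, 160, 40)
    mask = vegetation_mask(excess_green(img))
    coords, labels = sample_point_prompts(mask)
    print(mask.sum(), coords.shape, labels)
    print(angle_rmse([2.0, -2.0], [0.0, 0.0]))
```

Because RMSE squares each error, a few outliers such as the 19° to 48° cases dominate the overall figure, which is why the RMSE (9.78°) sits well outside the −5.72° to 8.72° range that covers most images.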

Published in: 2023 IEEE India Geoscience and Remote Sensing Symposium (InGARSS)